In most foundries, melt capacity is the largest expense and the overall limiting factor for production. Thermal analysis is a common way to maximize melting capacity without losing control of quality. Quality can be achieved at different levels, depending on the melting practice and on the needs and demands of the customers.

Low-Level Quality — The lowest required level of quality is found in foundries that melt any iron scrap supplied by junkyards. These foundries typically use inexpensive cupola melting and do not have a spectrometer lab. Carbon content can be controlled to some degree by monitoring slag color, but silicon, manganese, chromium, copper and other elements are free to wander all over the map. Here, a thermal analysis system gives the foundry a way to control silicon, which can then be adjusted through cupola additions and, possibly, 75% FeSi additions to the ladle. A thermal analysis measurement of pearlite and carbides also provides some information about the hardening elements.

Moderate-Level Quality — A more typical, moderate level of required quality applies to foundries that limit their incoming material to known scrap. Such foundries may use either electric or cupola melting and typically do not have problems with uncontrolled manganese, chromium or copper. For these foundries, carbon and silicon control is the main benefit of thermal analysis; the microstructure check for carbides and pearlite is less critical.

High-Level Quality — Foundries with the highest quality standards also benefit greatly from thermal analysis. These foundries have multiple levels of quality checks: cupola melting with holding furnaces, or electric melting furnaces (possibly with holding furnaces), plus a full spectrometer lab with combustion analysis. They typically have one additional quality requirement: the chemistry must meet specific standards, limits and requirements before the iron can be moved to the pouring line. Waiting for lab results can add 10 to 15 minutes to the melt cycle, and with the newer high-powered, medium-frequency furnaces, this delay can cost 20% to 25% of melt capacity.
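
The capacity loss can be checked with simple arithmetic. The sketch below is a simplified model, not a scheduling tool: it assumes the furnace sits idle while the lab result is pending and that nothing else overlaps with the wait. The 40 and 45 minute melt times are hypothetical values chosen for illustration; the article does not state a cycle time.

```python
def lost_capacity_fraction(melt_minutes, lab_wait_minutes):
    """Share of furnace time spent holding a finished heat for lab results.

    Simplified model (an assumption): the furnace melts, then waits idle until
    the lab releases the heat; no other work overlaps with that wait.
    """
    if melt_minutes <= 0 or lab_wait_minutes < 0:
        raise ValueError("melt time must be positive and wait time non-negative")
    return lab_wait_minutes / (melt_minutes + lab_wait_minutes)


def heats_per_shift(melt_minutes, lab_wait_minutes, shift_minutes=480):
    """Whole heats that fit in one shift under the same simplified model."""
    return int(shift_minutes // (melt_minutes + lab_wait_minutes))


# Hypothetical melt times paired with the 10-15 minute lab waits from the text.
for melt, wait in [(40, 10), (45, 15)]:
    print(f"{melt} min melt + {wait} min wait: "
          f"{lost_capacity_fraction(melt, wait):.0%} lost, "
          f"{heats_per_shift(melt, wait)} heats/shift vs "
          f"{heats_per_shift(melt, 0)} with no wait")
# 40 min melt + 10 min wait: 20% lost, 9 heats/shift vs 12 with no wait
# 45 min melt + 15 min wait: 25% lost, 8 heats/shift vs 10 with no wait
```

Under these assumptions, a 10 to 15 minute wait on a 40 to 45 minute melt reproduces the 20% to 25% range quoted above.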

The key here is that if the carbide stabilizers are reasonably consistent from heat to heat, thermal analysis can evaluate the other two elements, carbon and silicon, before the furnace reaches temperature, is slagged and is ready to tap. The chemistry lab then finishes its analysis and provides certification, along with feedback on the slowly changing carbide stabilizers. That extra 20% of melt capacity alone could boost productivity by an additional 10% to 15% per day, which is worth a fortune to the foundry.
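
The division of labor described above (thermal analysis on every heat for carbon and silicon, the lab for the slowly changing carbide stabilizers) can be illustrated as a release check. This is a hypothetical sketch, not MeltLab's logic: the function name, the element keys and the spec ranges are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    """Allowed weight-percent window for one element (hypothetical spec)."""
    low: float
    high: float

    def contains(self, value):
        return self.low <= value <= self.high


def heat_release_issues(ta_result, lab_result, spec):
    """Return out-of-spec findings; an empty list means the heat can be tapped.

    ta_result:  C and Si from the thermal analysis cup taken on this heat.
    lab_result: latest certified lab values for slowly changing elements
                (e.g. Mn, Cr), assumed stable from heat to heat.
    spec:       element -> Range of allowed weight percent.
    """
    issues = []
    for element, allowed in spec.items():
        # Fast-changing elements come from thermal analysis, the rest from the lab.
        source = ta_result if element in ta_result else lab_result
        value = source.get(element)
        if value is None:
            issues.append(f"{element}: no result")
        elif not allowed.contains(value):
            issues.append(f"{element}: {value:.2f}% outside "
                          f"{allowed.low:.2f}-{allowed.high:.2f}%")
    return issues


# Hypothetical gray iron spec and results.
spec = {"C": Range(3.20, 3.40), "Si": Range(2.00, 2.30),
        "Mn": Range(0.50, 0.80), "Cr": Range(0.00, 0.25)}
print(heat_release_issues({"C": 3.31, "Si": 1.95}, {"Mn": 0.62, "Cr": 0.12}, spec))
```

In this sketch a low silicon reading from the cup holds the heat for a correction, while the lab values are only required to exist and be in range, mirroring the idea that the lab certifies rather than gates each heat.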

Thermal Analysis in Electric Melting

Here is how it works in electric melting: The furnace has an initial power setting (counts) programmed to raise the temperature to between 2,550° and 2,600° F. The operator then takes a temperature measurement and calculates the remaining power needed to reach tap temperature. At the same time, the operator takes a thermal analysis sample. Within 1.5 to 2 minutes, carbon and silicon have been determined and the necessary additions calculated (MeltLab performs this calculation).
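
The article does not give MeltLab's addition formula. As a hedged illustration only, a basic mass balance for one element looks like the sketch below; the recarburizer content (99% C) and both recovery factors are hypothetical values that each foundry would determine for its own materials and practice.

```python
def addition_lb(bath_lb, measured_pct, target_pct, alloy_pct, recovery):
    """Pounds of an addition needed to raise one element to its target.

    Simplified mass balance (an assumption): ignores the small dilution caused
    by the addition's own weight. alloy_pct is the element content of the
    addition (e.g. 75 for 75% FeSi); recovery is the fraction that ends up in
    the melt. Returns 0 when the bath is already at or above target, because
    lowering C or Si needs dilution (e.g. steel scrap), not handled here.
    """
    shortfall_pct = target_pct - measured_pct
    if shortfall_pct <= 0:
        return 0.0
    element_lb = bath_lb * shortfall_pct / 100
    return element_lb / (alloy_pct / 100 * recovery)


# Hypothetical 10,000 lb heat, corrected after the thermal analysis sample.
carbon_add = addition_lb(10_000, 3.30, 3.45, alloy_pct=99, recovery=0.90)
fesi_add = addition_lb(10_000, 1.90, 2.10, alloy_pct=75, recovery=0.95)
print(f"recarburizer: {carbon_add:.1f} lb, 75% FeSi: {fesi_add:.1f} lb")
```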

The operator then adds the corrections while the furnace is still under power, so the additions are drawn under and quickly melted into the heat. By the time tap temperature is reached, the chemistry is corrected and the heat is ready to go. The lab sample can be taken either at the same time as the thermal analysis sample or after the additions are melted in. If the lab sample is taken with the thermal analysis sample, it is a check on the thermal analysis; if it is taken after the additions, it is a check on the final chemistry.

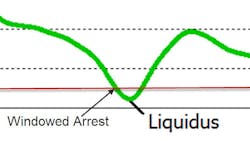

Thermal Analysis Accuracy — The last aspect of thermal analysis is accuracy. Not all thermal analysis instruments are equally accurate. Part of the accuracy depends on calibration, but much of it depends on the instrument itself. Inexpensive instruments use different ways of determining arrests. The early boxes used a technique called “windowing”: if the change in temperature over several seconds was less than a given value, that point was considered an arrest. This technique depended heavily on the amount of metal in the cup and on whether any air was blowing on the cup. As you can see below, no two samples have the same cooling rate at the liquidus, so setting an arbitrary cooling rate to call the liquidus builds inaccuracy into the system. The more modern approach finds the minimum cooling rate (green) for the liquidus arrest.
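
The difference between the two detection methods can be shown on synthetic data. The sketch below is a generic illustration, not MeltLab's or any vendor's algorithm. It assumes a made-up cooling curve whose slowest cooling occurs exactly at a known arrest temperature, then runs a fixed-threshold window (the 5 s window and 1 °F/s threshold are hypothetical settings) and a minimum-cooling-rate search on a slower-cooling and a faster-cooling sample. The same comparison applies to the eutectic arrest.

```python
def synthetic_curve(arrest_temp_f, slope_at_arrest, curvature,
                    t0=60.0, duration=120.0, dt=0.5):
    """Hypothetical cooling curve T(t) = Ta - s*(t - t0) - k*(t - t0)**3.

    dT/dt = -s - 3k(t - t0)**2, so cooling is slowest exactly at t0, where
    T = Ta. A larger k stands in for a faster-cooling sample (less metal in
    the cup, air on the cup) with the same true arrest temperature.
    """
    times = [i * dt for i in range(int(duration / dt) + 1)]
    temps = [arrest_temp_f - slope_at_arrest * (t - t0) - curvature * (t - t0) ** 3
             for t in times]
    return times, temps


def windowing_arrest(times, temps, window_s=5.0, threshold_f_per_s=1.0):
    """Old-style detection: first point where the average cooling rate over
    the preceding window falls below a fixed threshold."""
    dt = times[1] - times[0]
    n = round(window_s / dt)
    for i in range(n, len(temps)):
        avg_cooling = (temps[i - n] - temps[i]) / (times[i] - times[i - n])
        if avg_cooling < threshold_f_per_s:
            return times[i], temps[i]
    return None


def min_cooling_rate_arrest(times, temps):
    """Point of slowest cooling from a central-difference derivative.

    The search covers the whole synthetic record; on a real curve it would be
    limited to the region around each arrest.
    """
    rates = [(temps[i - 1] - temps[i + 1]) / (times[i + 1] - times[i - 1])
             for i in range(1, len(temps) - 1)]
    i = min(range(len(rates)), key=rates.__getitem__) + 1
    return times[i], temps[i]


for k in (0.0005, 0.001):
    times, temps = synthetic_curve(2190.0, 0.2, k)
    _, temp_window = windowing_arrest(times, temps)
    _, temp_deriv = min_cooling_rate_arrest(times, temps)
    print(f"k={k}: windowing {temp_window:.1f} F, "
          f"minimum cooling rate {temp_deriv:.1f} F")
# k=0.0005: windowing 2198.4 F, minimum cooling rate 2190.0 F
# k=0.001: windowing 2195.2 F, minimum cooling rate 2190.0 F
```

On this made-up curve, the fixed window reports an arrest several degrees too high, and how far off it is depends on how fast the sample cools, while the minimum-cooling-rate point stays at the true arrest for both samples.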

The liquidus is the key measurement for carbon equivalent (C.E.) and is part of the calculation for carbon and silicon. C.E. accuracy depends on the accuracy of the temperature calibration and on variation in the chemistry of the thermocouple wire used in the cups. All major manufacturers use wire with a tolerance of +/- 2° F. MINCO, now part of Electro-Nite, reported the bias value of its wire on each box of cups, which allowed a 90% correction for any variation in the wire. It is not known whether that practice will continue now that the two companies have merged.
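
A hedged sketch of how a liquidus reading could become a carbon estimate: correct the reading for the wire bias printed on the box (the sign convention bias = reading minus true temperature is an assumption), convert it to C.E. with a straight-line calibration fitted to the foundry's own paired lab results, and take carbon by difference using the standard relation C.E. = %C + %Si/3 (phosphorus ignored). The calibration pairs, the reading and the silicon value are made up for illustration; the article does not disclose how any instrument performs this step, and silicon here is simply assumed to come from a separate estimate.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = intercept + slope * xs."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired points")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def correct_for_wire_bias(reading_f, wire_bias_f):
    """Remove the wire bias printed on the box of cups.

    Assumed sign convention: bias = reading - true temperature.
    """
    return reading_f - wire_bias_f


def carbon_from_ce(ce_pct, si_pct):
    """Carbon by difference, using C.E. = %C + %Si/3 (phosphorus ignored)."""
    return ce_pct - si_pct / 3


# Made-up calibration pairs: liquidus (deg F) vs. lab C.E. on the same heats.
liquidus_f = [2160.0, 2180.0, 2200.0, 2220.0]
lab_ce = [4.00, 3.89, 3.78, 3.67]
intercept, slope = fit_line(liquidus_f, lab_ce)

liquidus = correct_for_wire_bias(2191.5, 1.5)  # hypothetical reading and bias
ce = intercept + slope * liquidus
carbon = carbon_from_ce(ce, 2.10)  # Si = 2.10% assumed, from a separate estimate
print(f"C.E. {ce:.2f}%, C {carbon:.2f}%")
```

Because the C.E. calibration has a slope of roughly a tenth of a percent per 20° F in this made-up data, an uncorrected 2° F wire error shifts the estimate by about 0.01% C.E.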

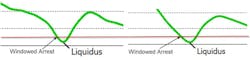

Eutectic Measurement — The same issues apply to the second arrest measurement. The windowing technique picks the arrest point very early in the eutectic, while the strongest point of the eutectic arrest is generally several degrees lower. This is the main cause of inaccuracy in the earlier instrument designs.

Note the eutectic arrest: green is the cooling rate and red is the temperature curve. The arrow on the red curve marks approximately where the older instruments would record the eutectic; as shown, this results in a difference of about 5 to 10 degrees. If the cooling rate of the sample changes because of sample size or air movement around the cup, the windowing technique yields different answers, while the derivative technique remains consistent from sample to sample.

The Next Step: Microstructure Analysis

At MeltLab, we remain dedicated to improving the art of thermal analysis in every way possible. For some time now, universities and other researchers have been experimenting with measuring microstructure from thermal analysis.

Ductile Iron — Some companies have attempted to qualify ductile iron by combining time periods, rates of temperature rise, endothermic reactions and countless other features of the curve into a complex algorithm, and then correlating the result with a ‘pass or no-pass’ decision for the iron. These systems are “custom fitted” to each operation over a period of several months. We believe there are better, simpler and less costly solutions that come simply from obtaining a better curve. We have developed new methods to measure nodularity and nodule count in ductile iron, and we are also examining the shrinkage tendency of different irons.

Aluminum and High-Temperature Alloys — In aluminum and high-temperature alloys, we can measure the amount of energy released by each phase as it crystallizes and then estimate the microstructure of the sample. The critical factors have been a new smoothing method, close attention to noise filtering, and innovative techniques such as higher-order derivatives. At MeltLab, we work with customers to develop new applications of thermal analysis for any metal alloy: iron, steel, aluminum, sterling silver, copper-phosphorus and even bismuth-tin alloys.
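
The new smoothing method is not described here, so the sketch below substitutes a plain centered moving average purely as a stand-in. It shows the general idea of filtering noise before differentiating: smooth the temperatures, take the first derivative (cooling rate), smooth it again, and take the second derivative. The arrest is where the smoothed cooling rate is slowest, which is also where the second derivative passes from positive to negative. The curve, its 2105° F arrest and the ±0.02° F noise are hypothetical.

```python
import random


def moving_average(values, half_width):
    """Centered moving average; the window is truncated at the record ends."""
    n = len(values)
    out = []
    for i in range(n):
        lo, hi = max(0, i - half_width), min(n, i + half_width + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out


def derivative(values, dt):
    """Central-difference derivative with one-sided differences at the ends."""
    n = len(values)
    inner = [(values[i + 1] - values[i - 1]) / (2 * dt) for i in range(1, n - 1)]
    return [(values[1] - values[0]) / dt] + inner + [(values[-1] - values[-2]) / dt]


def locate_arrest(times, temps, half_width=5):
    """Return (arrest_time, smoothed dT/dt, d2T/dt2).

    The arrest is taken as the maximum of the smoothed dT/dt (slowest cooling).
    """
    dt = times[1] - times[0]
    first = moving_average(derivative(moving_average(temps, half_width), dt),
                           half_width)
    second = derivative(first, dt)
    i_arrest = max(range(len(first)), key=first.__getitem__)
    return times[i_arrest], first, second


rng = random.Random(7)  # fixed seed so the example is repeatable
dt, t0 = 0.5, 60.0
times = [i * dt for i in range(241)]
# Hypothetical curve with its slowest cooling at t0 = 60 s, plus small noise.
temps = [2105.0 - 0.2 * (t - t0) - 0.001 * (t - t0) ** 3 + rng.uniform(-0.02, 0.02)
         for t in times]
t_arrest, first, second = locate_arrest(times, temps)
print(f"arrest near t = {t_arrest:.1f} s")
```

Each differentiation amplifies noise, which is why the sketch smooths both before the first derivative and again before the second; a real instrument would need a filter tuned to its own sampling rate and noise level.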

David Sparkman is the president and founder of MeltLab Systems, which includes the proprietary MeltLab™ thermal analysis system along with GSPC©, an integrated manufacturing graphic statistical process control system. Contact him at tel. 765-521-3181.